Did you know that with the same question/data you can also run…

- A TURF analysis to identify the optimal mix to market, or

- A segmentation to identify core target groups?

That’s right, max diff is awesome!!!

In case you’re not familiar with it, we’ll recap traditional max diff before we talk about all the other cool stuff you can do with it…

What is Max Diff?

MaxDiff is an analytical approach for obtaining preference/importance scores for multiple items. Sometimes referred to as best-worst scaling, it’s essentially a ranking exercise that puts other ranking exercises to shame. It can be used to assess pretty much anything you want a hierarchy for:

- Brand preference

- The appeal of product features

- Consumers’ most preferred flavours

- What are the best rewards we can offer?

- What’s most important when choosing where to shop?

- What messaging will resonate most with our customers, or those we want to be customers?

- What’s important when choosing where to live?

- What should we have on our menu?

- What services should a bank offer its customers?

- What environmental initiatives do our customers think we should develop first?

- What’s most important when booking a holiday?

Over the past few years we’ve run hundreds of Max Diffs, probably on every topic going!

How does it work?

Instead of asking people to look at many items and rate each one (where everything could end up as equally important), or asking respondents to take a list of 10/15/20 things and put them in order of importance (a very difficult and time-consuming task even for the most dedicated respondent!), a Max Diff exercise simply presents respondents with a small subset of the items and asks which of the items shown is the most important or appealing to them and which is the least…

Imagine you were <insert context of question>… Which of the following <features/benefits/messages/products> would be most <appealing/important>, and which would be the least <appealing/important>?

It’s much easier to decide between just 4 items!

Once respondents have seen several screens like the above (with the items shown determined by an algorithm), we use statistical modelling (hierarchical Bayes, or HB, methodology) to robustly quantify the hierarchy of importance or appeal, both at an overall level and for each of our respondents.

The way that Max Diff gets respondents to trade benefits off against each other leads to a much more differentiating measure of importance or appeal than traditional rating scales or rankings.
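To make the trade-off idea concrete, here is a minimal sketch of a simple count-based score: the number of times an item was picked as best, minus the number of times it was picked as worst, divided by the number of times it was shown. This is a much simpler stand-in for the hierarchical Bayes modelling described above, not a replacement for it, and the item names and responses are made up for illustration.

```python
from collections import defaultdict

def count_scores(tasks):
    """Best-minus-worst count score per item, scaled by times shown.

    `tasks` is a list of (shown_items, best_item, worst_item) tuples,
    one per screen that was completed.
    """
    shown = defaultdict(int)
    net = defaultdict(int)
    for items, best, worst in tasks:
        for item in items:
            shown[item] += 1
        net[best] += 1
        net[worst] -= 1
    # Scores range from -1 (always worst) to +1 (always best).
    return {item: net[item] / shown[item] for item in shown}

# Hypothetical data: three screens of four items each.
tasks = [
    (("price", "quality", "range", "location"), "price", "location"),
    (("price", "service", "range", "parking"), "price", "parking"),
    (("quality", "service", "location", "parking"), "quality", "location"),
]

scores = count_scores(tasks)
for item, score in sorted(scores.items(), key=lambda kv: -kv[1]):
    print(f"{item:10s} {score:+.2f}")
```

Even with only three screens the items start to separate out, which is the differentiation that rating scales tend to struggle to deliver.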

Should I Anchor my Max Diff?

An ‘anchored max diff’ works the same as the traditional max diff above, but at the end of the exercise each respondent is presented with all of the items in turn and asked to indicate which ones “would definitely motivate them to take action”.

This one additional question frames the exercise by establishing what will and won’t work for the business.

Because we have asked this question to identify the items that are truly motivating, we can differentiate in our hierarchy between the messages that will engage customers and those that won’t. In practical terms, the analysis draws a line in our results: anything above the line will result in action from our target market, and anything below the line won’t.

I’ve heard about Express Max Diff & Bandit Max Diff, what are they?

Recent developments in the world of max diff allow us to push the parameters of what we can include even further. Whilst a traditional max diff can easily deal with 20 items, copes well up to 30 items and has often been pushed as far as 40 items, the more we put into it the more screens we need each respondent to see, which can be problematic in an already long survey (even for panellists!).

You’d be surprised how many clients come to us needing to test 60, 80 or even 100 items!

Both of these techniques allow us to test a larger number of items without making our respondents want to cry!

In the case of express max diff, not every respondent sees all of the items, rather just a subset, and the analysis borrows more information from its observations of other respondents’ behaviour in relation to items that overlapped.

And in the case of bandit max diff, the software learns from early respondents and shows preferred items more frequently to subsequent respondents, ensuring a robust model at the top end of the hierarchy but a slightly ‘wobblier’ model when it comes to the less important items.

However, both have some practical implications for implementation and some caveats for the use of the results, so please do get in touch if you want to know more!
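As a rough illustration of the bandit idea, the sketch below uses Thompson sampling, a common approach to this kind of adaptive selection. Whether any particular max diff software works exactly this way is an assumption on our part, and the function name `pick_items` and the tallies are hypothetical. Each item gets a random draw based on how often earlier respondents picked it as best, and the items with the highest draws go on the next screen.

```python
import random

def pick_items(best_counts, shown_counts, n_items=4, rng=random):
    """Pick items for the next screen by Thompson sampling.

    Each item gets a draw from Beta(best + 1, shown - best + 1), and the
    items with the highest draws are shown. Items picked as best more
    often by earlier respondents therefore tend to appear more often.
    """
    draws = {
        item: rng.betavariate(best_counts[item] + 1,
                              shown_counts[item] - best_counts[item] + 1)
        for item in shown_counts
    }
    return sorted(draws, key=draws.get, reverse=True)[:n_items]

# Hypothetical tallies from early respondents: each item shown 50 times.
shown_counts = {f"item_{i}": 50 for i in range(8)}
best_counts = dict(zip(shown_counts, [40, 30, 20, 10, 5, 3, 1, 0]))

rng = random.Random(0)  # seeded so the sketch is repeatable
appearances = {item: 0 for item in shown_counts}
for _ in range(200):
    for item in pick_items(best_counts, shown_counts, rng=rng):
        appearances[item] += 1
print(appearances)
```

The weaker items still turn up occasionally, just far less often, which is why the model ends up more robust at the top of the hierarchy than at the bottom.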

What was that you said about TURF Analysis?

Whilst the Max Diff analysis will clearly identify those products that are most appealing and those that are least appealing, not all potential customers will find the same products/features/messages appealing.

If we simply pick the top 2 or 3, it is likely that they will have a similar essence and will appeal to the same people. As a result, the ones we choose might not appeal to as wide an audience as possible.

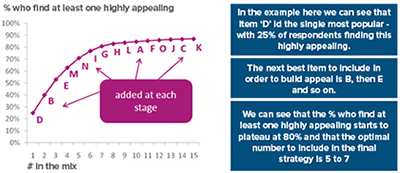

TURF (Total Unduplicated Reach & Frequency) Analysis identifies the optimal combination of 2, 3, 4… products/features/messages that could be adopted in order to maximise the appeal to as wide an audience as possible.
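The reach part of this can be sketched in a few lines. The example below assumes we already know which respondents find each item appealing (in practice that would come from the max diff results, for example via a cut-off on each respondent’s scores). The flavour names, respondent ids and the function name `best_portfolio` are made up for illustration, and trying every combination like this is only practical for a modest number of items.

```python
from itertools import combinations

def best_portfolio(appeal, size):
    """Return (items, reach) for the `size`-item combination reaching most respondents.

    `appeal` maps each item to the set of respondent ids who find it appealing.
    A respondent counts as reached if at least one item in the mix appeals to them.
    """
    best_combo, best_reach = None, -1
    for combo in combinations(sorted(appeal), size):
        reached = set().union(*(appeal[item] for item in combo))
        if len(reached) > best_reach:
            best_combo, best_reach = combo, len(reached)
    return best_combo, best_reach

# Hypothetical data: ten respondents, four flavours.
appeal = {
    "vanilla":   {1, 2, 3, 4, 5, 6},
    "chocolate": {1, 2, 3, 4, 5},
    "mint":      {7, 8, 9},
    "lemon":     {9, 10},
}

pair, pair_reach = best_portfolio(appeal, 2)
trio, trio_reach = best_portfolio(appeal, 3)
print(pair, pair_reach)
print(trio, trio_reach)
```

In this made-up data the two most popular flavours, vanilla and chocolate, appeal to largely the same people and together reach only 6 of the 10 respondents, whereas vanilla plus mint reaches 9.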

And a segmentation you say?

Yep! Segmentation too…

In addition to analysing respondent choices in the max diff exercise in the normal manner, we can also use them to identify segments of respondents with similar preferences/needs. To do this we use Latent Class Analysis, a type of analysis particularly effective for use with choice task data.

Because the input is based on respondents’ needs and is collected in a manner that forces differentiation in the results from an individual respondent, we find that the solutions are often clearer than ones based on other data types (including agree/disagree scales), ultimately giving us more powerful segments to use as a business. Once identified, these needs-based segments can be profiled on the max diff itself as well as all other key questions in the survey.

Furthermore, at Boxclever we have developed a proprietary technique to create a golden question algorithm for our segmentations, ensuring we can profile customers or respondents in other surveys into these groups. In fact, when this technique was tested against more traditional discriminant analysis, we found a 15-20 percentage point improvement in the number of respondents assigned correctly!

So, in conclusion, one simple question technique can give us loads of awesome outputs… MAX DIFF RULES!!